Mono-Stereo 3D Scanner

Second HackUMass project that totally worked

For HackUMass XII, my friends and I had the amazingly brilliant idea to make a cheap 3D Scanner using stereovision. Except there wasn’t any stereo, because we only had access to one Pi camera.

So what ended up happening? We figured that since stereovision essentially means taking two pictures simultaneously from different angles to recover depth and distance, we could try to mimic that by moving a single camera to different positions around whatever object was being scanned.
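For anyone curious why two viewpoints give you depth at all, here is a minimal sketch of the standard rectified-stereo relationship, depth = focal length × baseline / disparity. This is not code from our project: the function name and the parameters `focal_px` and `baseline_m` are made up for illustration, and it assumes the two images are already rectified. In a single-camera rig like ours, the baseline would be however far the camera travels between the two shots.

```python
import numpy as np


def depth_from_disparity(disparity_px, focal_px, baseline_m):
    """Convert a disparity map (pixels) to depth (metres) using Z = f * B / d.

    focal_px and baseline_m are hypothetical calibration values:
    the focal length in pixels and the distance between the two
    camera positions in metres.
    """
    disparity = np.asarray(disparity_px, dtype=float)
    # Pixels with no valid match are treated as infinitely far away.
    depth = np.full(disparity.shape, np.inf)
    valid = disparity > 0
    depth[valid] = focal_px * baseline_m / disparity[valid]
    return depth
```

The takeaway is that depth is inversely proportional to disparity, so a noisy or wrong disparity map turns directly into a distorted point cloud.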

After some quick brainstorming, we decided to move the camera back and forth along one axis while the object spun on a turntable. The hope was that, using an online Stereovision OpenCV repo, each pair of images would produce a point cloud, and the clouds from every angle could then be concatenated together. Was it a complex task to complete within 36 hours? Yes. But that was a problem for the software team, aka my friend Karl.
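To give a rough idea of what "concatenating" the point clouds involves, here is a small NumPy sketch. It is not Karl's code. It assumes each cloud is an (N, 3) array in the camera frame, that the origin has already been moved onto the turntable's axis, and that the axis is the y axis. The function names are hypothetical.

```python
import numpy as np


def rotation_about_y(angle_deg):
    """3x3 rotation matrix for a rotation of angle_deg about the y axis."""
    a = np.radians(angle_deg)
    c, s = np.cos(a), np.sin(a)
    return np.array([[c, 0.0, s],
                     [0.0, 1.0, 0.0],
                     [-s, 0.0, c]])


def merge_turntable_clouds(clouds_by_angle):
    """Merge point clouds captured at different turntable angles.

    clouds_by_angle maps a table angle in degrees to an (N, 3) array.
    Each cloud is rotated back by its table angle so that all points
    end up in the object's own frame, then everything is stacked.
    """
    merged = []
    for angle_deg, points in clouds_by_angle.items():
        undo_table_rotation = rotation_about_y(-angle_deg)
        # Row vectors: p @ R.T is the same as R @ p for each point.
        merged.append(np.asarray(points, dtype=float) @ undo_table_rotation.T)
    return np.vstack(merged)
```

In practice the clouds would also need a known distance from the camera to the turntable axis, and probably some alignment cleanup, before they line up this neatly.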

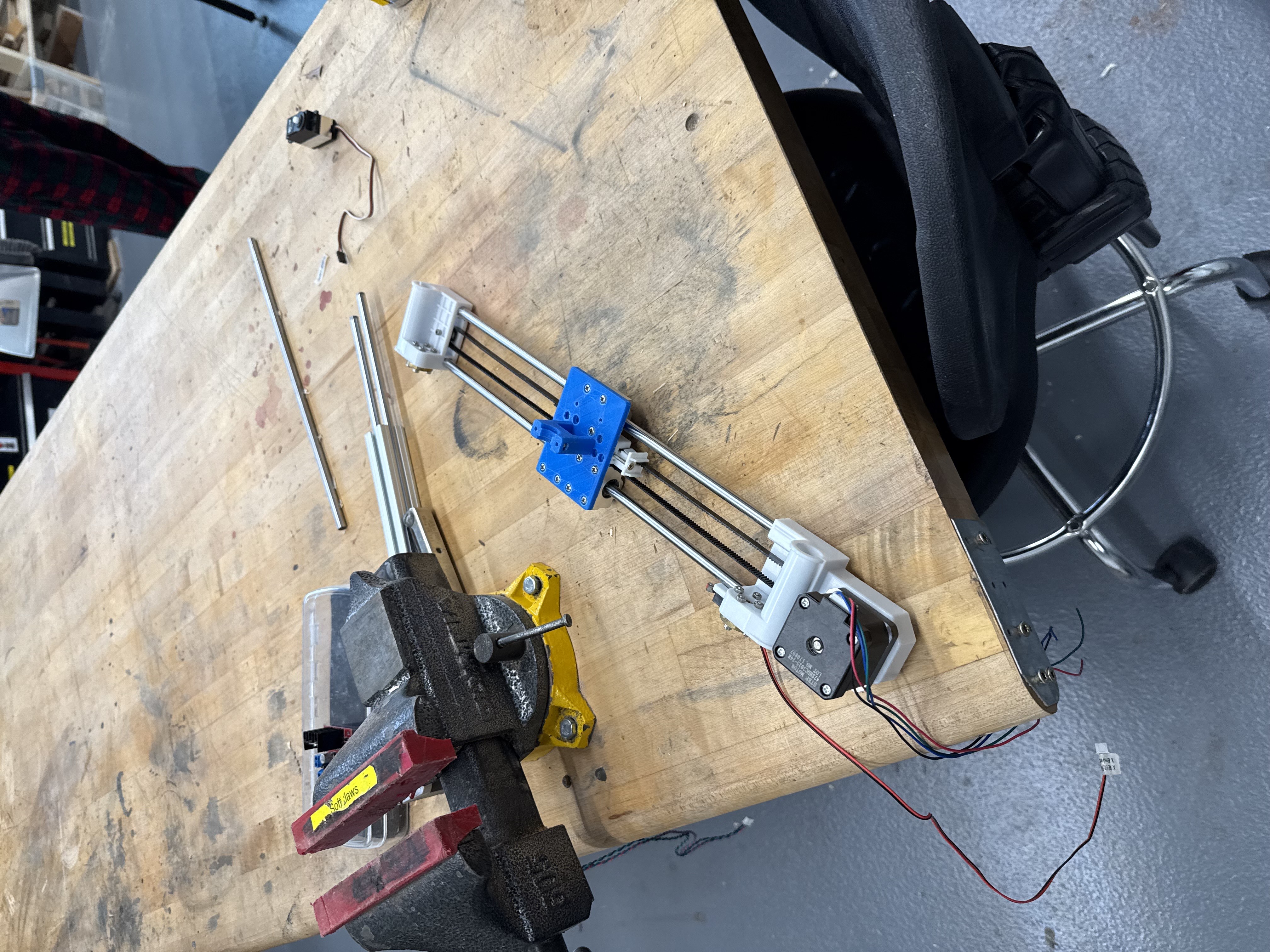

For the mechanical assembly, my friend Max and I immediately harvested a 3D Printer (thank you to UMass M5). Eventually we would repurpose the belt assembly as our platform for the camera. It looks like this:

Initially, we had a lot of trouble with slack in the belt, which we fixed with some quick tensioning. From there, we designed a rough mount for the Raspberry Pi and camera. After that, the rest of the mechanical and electrical parts were pretty trivial. We used a separate ESP32 to actuate the motor for the spin table, as well as to control the stepper motor. The only slight hiccup was that the motor drivers we were using for the stepper motors were underpowered and overheated extremely fast. We switched them out for an H-bridge motor controller, which seemed to work fine.

To test the overall assembly, I wrote a quick script that turned the motor 250 steps in each direction. So the idea was for the camera to move 250 steps left, take a picture, move 250 steps right, take another picture, and then hopefully that pair would generate a point cloud. Then we would simply turn the object 90 degrees and repeat. Simple enough.
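The sequence described above can be sketched as a plain list of actions. This is not the script we actually ran: `plan_scan` and the action names are hypothetical, and it follows the description literally (250 steps one way, a picture, 250 steps back, another picture), with four views 90 degrees apart assumed as the default.

```python
def plan_scan(steps=250, views=4, degrees_per_view=90):
    """Return the ordered list of (action, argument) tuples for one scan.

    Negative step counts mean "left", positive mean "right".
    The action names are placeholders for whatever the motor
    controller and camera code actually expose.
    """
    actions = []
    for view in range(views):
        actions.append(("move_camera", -steps))
        actions.append(("capture", f"view{view}_left"))
        actions.append(("move_camera", +steps))
        actions.append(("capture", f"view{view}_right"))
        # No need to rotate again after the final view.
        if view < views - 1:
            actions.append(("rotate_table", degrees_per_view))
    return actions
```

Keeping the plan separate from the hardware calls makes it easy to print and sanity-check the sequence on a laptop before anything moves.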

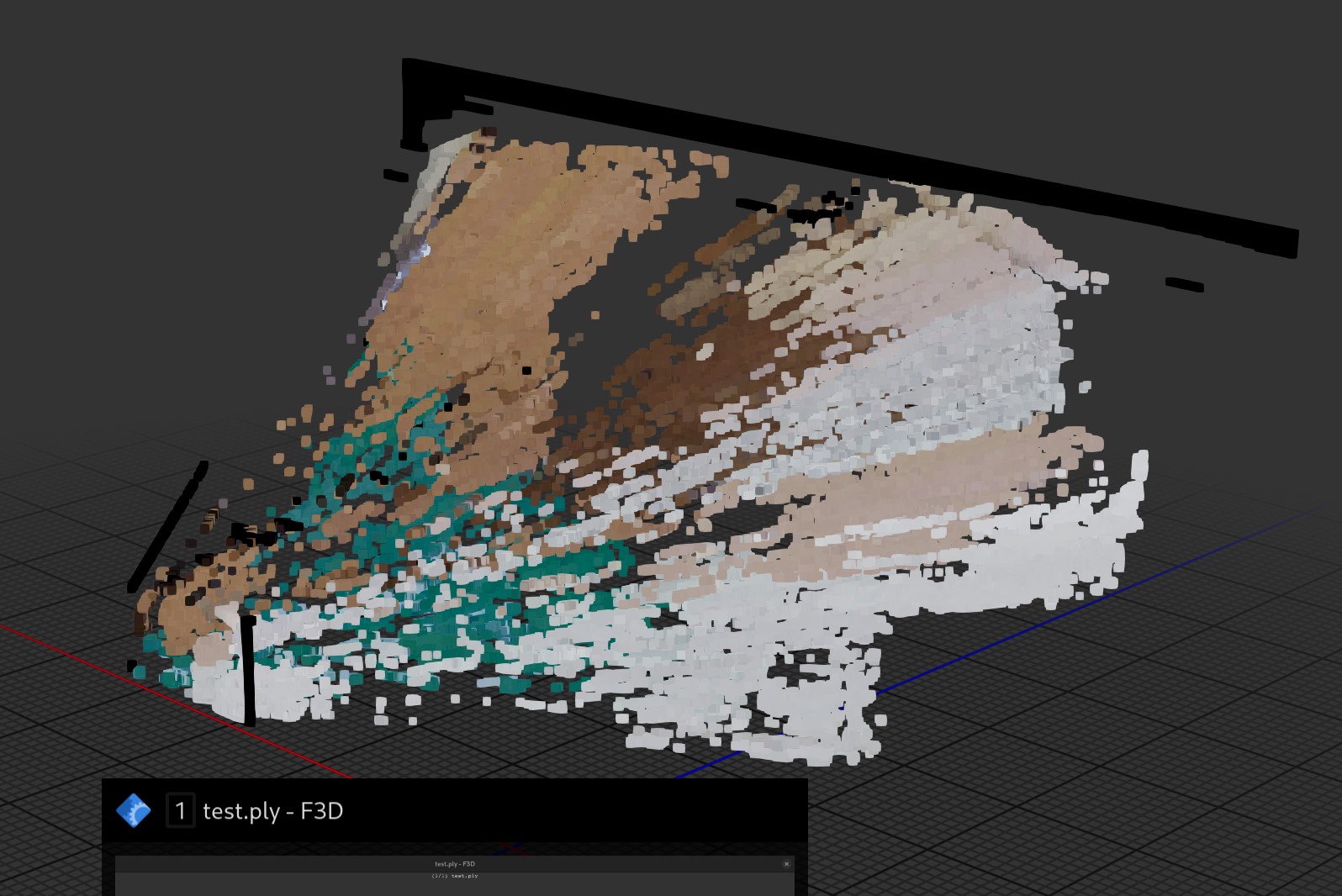

We quickly realized that the real problem with the project was the software. We ran through at least 5 or 10 repos for generating point clouds from static images, but none of them worked as intended. Not to mention how computationally expensive it was on our laptop. The closest we ever got was this:

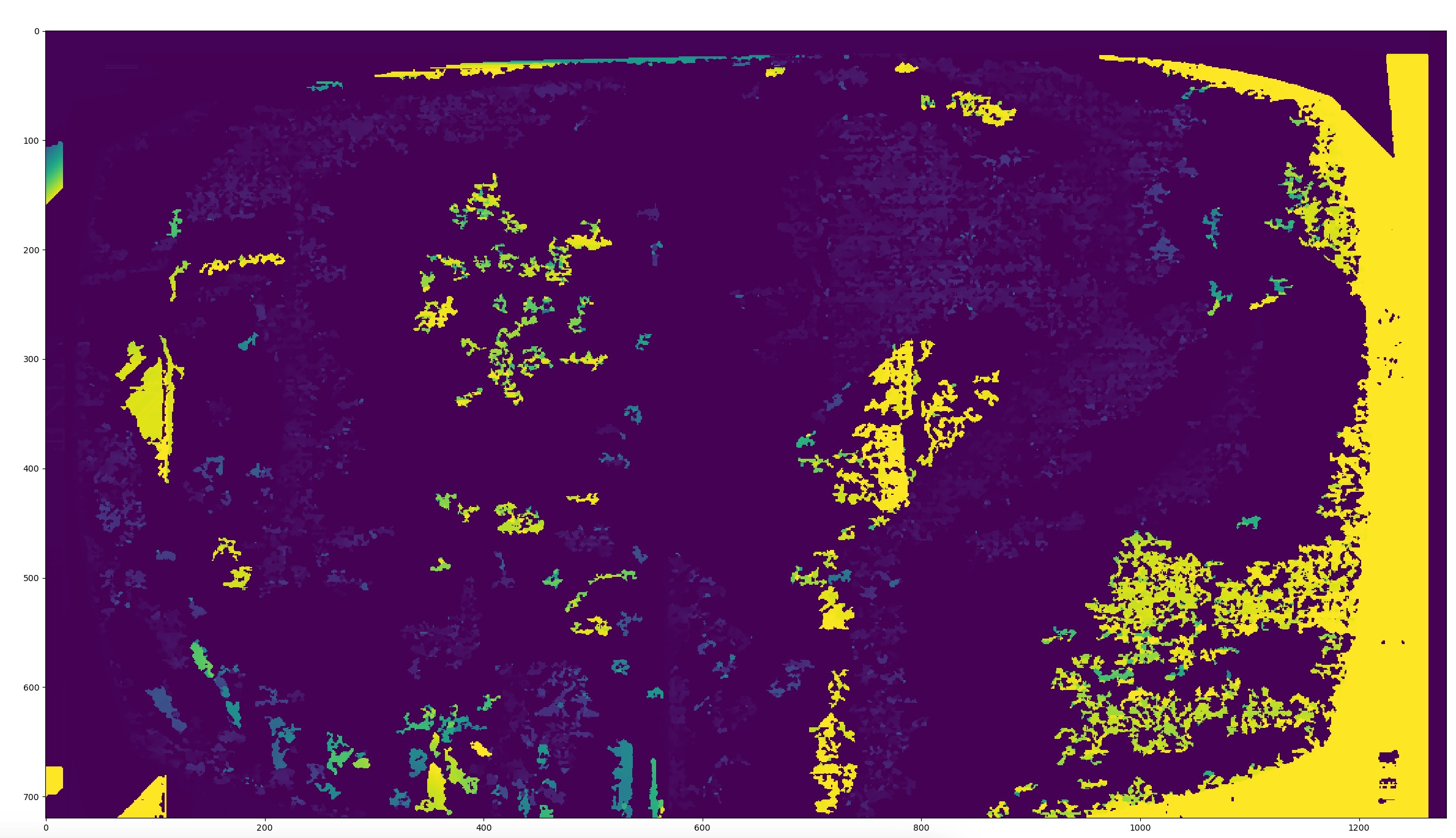

An interesting result, as you can tell. We figured that the problem originated in our depth map, so we tried our best to dig through the code and see why we were not getting a correct point cloud. Here is what the depth map ended up looking like:

Which wasn’t at all what we were looking for. At this point, we were pretty frustrated because we had all the moving parts for the project to work: the motor assembly, the point cloud concatenation method, the turntable, and even a backdrop for the camera. But unfortunately, we weren’t getting anywhere without fixing the depth map.

We didn’t end up submitting the project since it wasn’t finished, but hopefully we can revive it one day. Here are the few pictures I took before the event ended.